The Evolution of the GPU

2025-07-31

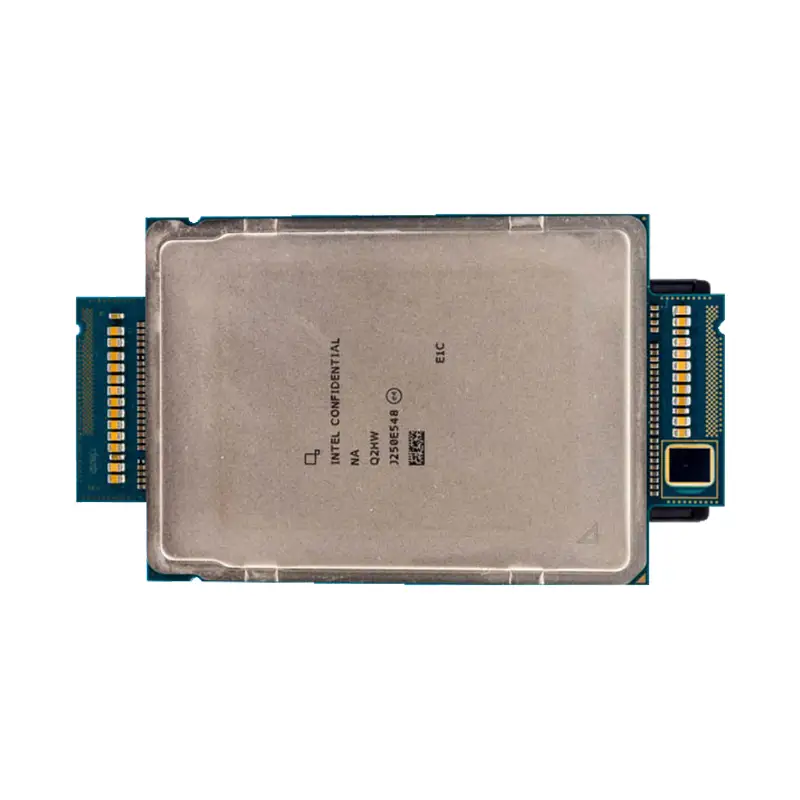

Remember the pixelated screens of Super Mario from your childhood? Back then, graphics hardware struggled with even basic rendering. Who could have imagined that, decades later, these devices would not only let you drift through racing games in 4K but also help scientists model climate change, help doctors analyze CT scans, and even power today's popular AI image generators? Let's look at how the GPU, or "graphics processing unit," evolved from a gaming accessory into the core hardware of the digital age.

NVIDIA was founded in 1993, when graphics cards were little more than translators, converting the computer's output into signals a monitor could display. It wasn't until the release of the GeForce 256 in 1999, which NVIDIA marketed as the first "GPU," that the term graphics processing unit entered the mainstream. The GeForce 256 moved transform and lighting calculations from the CPU onto the graphics card. It was like a kindergartener who had just learned to draw a circle, but it already made the zombies in Half-Life look less like paper cutouts.

A major milestone came in 2006 with the announcement of CUDA (Compute Unified Device Architecture), NVIDIA's platform for general-purpose computing on GPUs. It was like teaching the GPU a new skill, turning it from an "artist" that could only draw into a "student" that could also solve math problems. At the time, GPUs were still judged mainly by how well they rendered games like Crysis; few could have imagined that this design would later become the golden formula for AI computing.

2012 was especially interesting. That year, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton used two GTX 580 graphics cards to train AlexNet, a neural network that beat traditional computer-vision methods by a wide margin in the ImageNet image-recognition competition and shook the industry. It was as if GPUs had been certified as a rising star: it turned out they could not only play games but also serve as the "brains" of artificial intelligence.

In recent years, progress has become even more dramatic. By rough estimates, the Ampere-architecture cards released in 2020 offer on the order of 1,000 times the computing power of the top graphics cards of 2000. If you get a CT scan today, the AI-assisted diagnosis system may well run on dozens of GPUs. High-resolution weather forecasts also depend on their ability to process massive amounts of data. And training the recently popular ChatGPT reportedly required tens of thousands of GPUs running day and night.

Looking back, the development of GPUs reads like a tech geek's comeback story. From the "little brother" the CPU once looked down on to the "core engine" driving the digital revolution, every step along the way has brought surprises. The next time you see a lifelike AI-generated image or a foreign-language news report translated in seconds, give a thumbs-up to the GPU chips working silently behind the scenes: they are the true unsung heroes of the digital world.
